How to get started with machine learning and AI

At best, "artificial intelligence" as we currently understand it is a misnomer. AI is artificial, but it is not intelligent in the slightest. It remains one of the most popular subjects in business and has rekindled interest in academia. This is nothing new: over the past 50 years, AI has gone through a number of highs and lows. What distinguishes the recent wave of AI accomplishments from earlier ones is that powerful computing hardware is now available that can finally realize some outlandish ideas that have been floating around for a while.

When what we now call artificial intelligence first emerged in the 1950s, there was disagreement about what to name the discipline. Herbert Simon, co-creator of the General Problem Solver and the Logic Theorist program, argued that the field should be called "complex information processing." That name doesn't convey the same sense of wonder as "artificial intelligence," nor does it suggest that machines can think like humans.

However, "complex information processing" is a far more accurate description of what artificial intelligence actually is: dissecting complex data sets and drawing inferences from the results. Systems that identify what's in a picture, suggest what to buy or watch next, and recognize speech (in the form of virtual assistants like Siri or Alexa) are some examples of AI in use today. None of these come close to human intelligence, but they demonstrate that with enough information processing, we can accomplish amazing feats.

It doesn't matter whether we call this field "complex information processing," "artificial intelligence," or the more menacing-sounding "machine learning." Countless hours of labor and human creativity have gone into building some truly amazing applications. Take GPT-3, a deep-learning model for natural language, as an example. It can produce text that is nearly indistinguishable from human writing, but it can also go hilariously wrong. It is backed by a neural network with some 175 billion parameters that models human language.

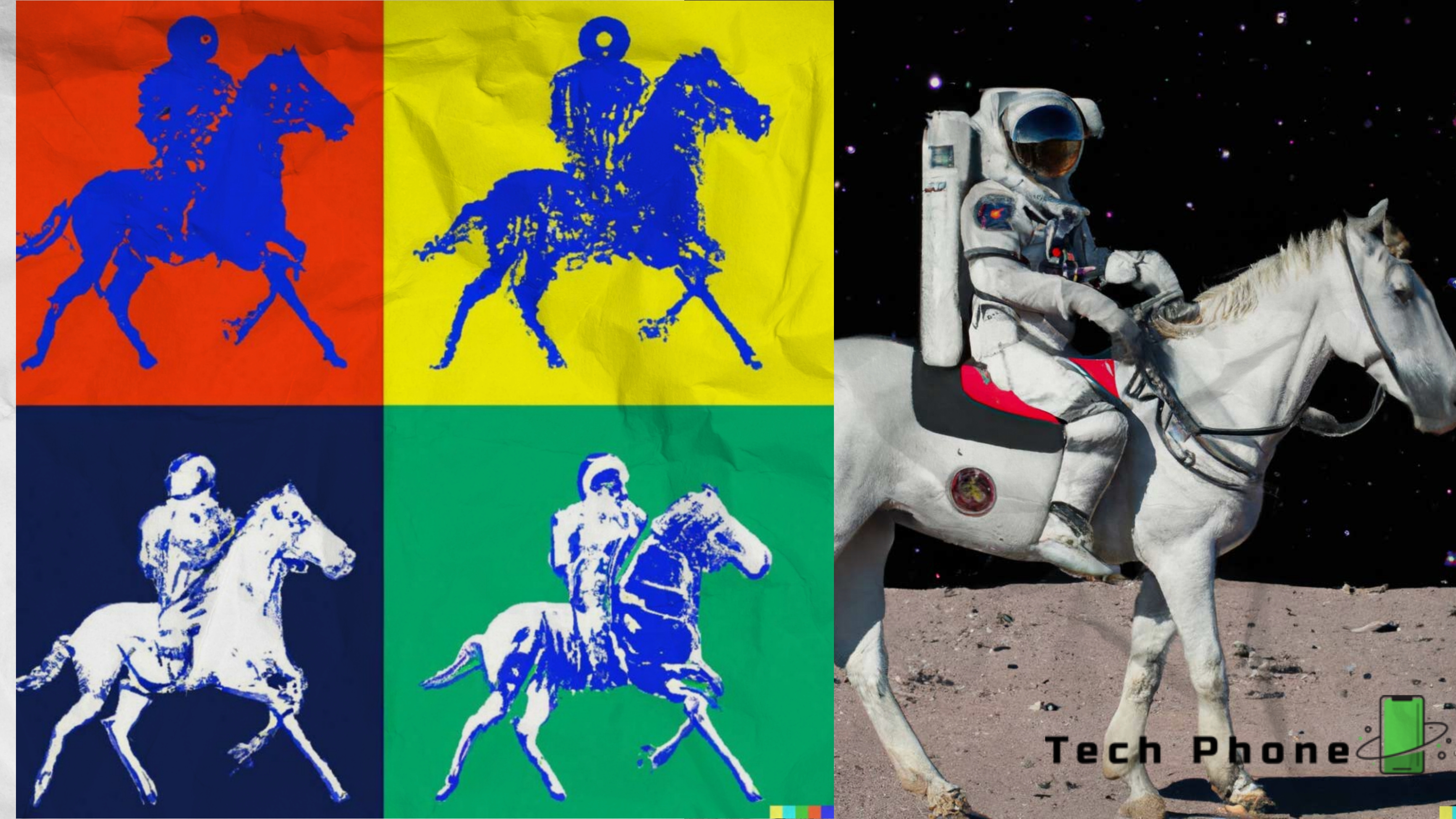

The Dall-E tool, built on top of GPT-3, can create an image of whatever fantastical object a user asks for. Its revised 2022 version, Dall-E 2, goes much further because it can "understand" highly abstract styles and concepts. For instance, when asked to picture "an astronaut riding a horse in the style of Andy Warhol," Dall-E 2 will produce a variety of images like these:

Dall-E 2 generates an image from its internal model rather than searching Google for a similar one. The resulting artwork is created entirely with math.

Not every AI application is as flashy as these, but nearly every industry is now using AI and machine learning. Machine learning is quickly becoming a necessity across a wide range of sectors. It powers everything from recommendation engines in retail to pipeline safety in oil and gas to diagnostics and patient privacy in health care. Because not every business has the means to build products like Dall-E from the ground up, there is high demand for accessible, affordable toolsets.

Meeting that demand resembles the challenge of the early years of commercial computing, when computers and software were rapidly evolving into the technology businesses needed. Not every company needs to create the next operating system or programming language. Many do, however, want to capitalize on the potential of these cutting-edge fields, and they need similar tools to do it.

Overview of the tool landscape

What steps are involved in creating an ML/AI model today? Dr. Ellen Ambrose, director of AI at Protenus, a health care start-up in the Baltimore area, spoke with Ars about how to create a new ML model. According to her, developing a new model breaks down into three main components: "25% asking the appropriate question, 50% data exploration and cleaning, feature engineering, and feature selection, [and] 25% training and testing the model." Having access to lots of data is helpful, but there are still several questions you should investigate before using it.

Ambrose argues that businesses must understand which problems machine learning can help them solve. More importantly, they must understand which questions their data can actually answer.

The tools at your disposal can't always tell you which questions to ask. They can, however, help a team explore its data and then train and evaluate a specific ML model.

Several companies currently sell software packages that let teams or individuals build an AI or machine learning model without developing a solution from scratch. Beyond these all-in-one packages, numerous freely available libraries let developers use machine learning. In practice, a developer, data scientist, or data engineer would not be starting from scratch in the vast majority of machine learning systems.

Training

Once a team has decided which questions to ask and whether the data is sufficient to answer them, the model needs to be configured. Most of your data will serve as the foundation for training the model. A portion must be set aside as a "validation set" to help ensure the model is performing as it should.
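Here is a minimal sketch of holding out a validation set with the standard library. It assumes the data is a plain Python list of (feature, label) pairs and uses a 20 percent hold-out. Both are illustrative choices for this example, not recommendations from the article.

```python
import random

def train_validation_split(rows, validation_fraction=0.2, seed=42):
    """Shuffle a copy of rows and split it into (train, validation) lists."""
    if not 0.0 < validation_fraction < 1.0:
        raise ValueError("validation_fraction must be between 0 and 1")
    shuffled = list(rows)  # copy so the caller's data is not reordered
    random.Random(seed).shuffle(shuffled)  # fixed seed makes the split reproducible
    n_validation = max(1, round(len(shuffled) * validation_fraction))
    return shuffled[n_validation:], shuffled[:n_validation]

# Hypothetical toy data: (feature, label) pairs.
data = [(x, int(x > 50)) for x in range(100)]
train, validation = train_validation_split(data)
print(len(train), len(validation))  # 80 20
```

The fixed seed matters in practice. Rerunning the experiment with the same hold-out makes it possible to tell whether a change to the model, rather than a lucky split, improved the validation score.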

This seems simple enough on the surface: set aside a chunk of your data and keep it hidden from training so you can use it for validation later. But things can quickly spiral out of control. What proportion is appropriate? What if the event you want to model is extremely rare? Both the training and validation sets need examples of that event, so how should the data be divided? Configuration is still a crucial step to get right, but AI/ML tools can help decide how to make this split and may even help resolve fundamental issues with your data.
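One common answer to the rare-event problem is a stratified split. The data is split separately within each label, so the rare class lands in both sets in roughly its original proportion. The sketch below is a simple hand-rolled version, not any particular tool's implementation. The "fraud" data and the `label_of` parameter are hypothetical.

```python
import random
from collections import defaultdict

def stratified_split(rows, label_of, validation_fraction=0.2, seed=0):
    """Split rows label by label so each label appears in both train and
    validation sets in roughly the same proportion."""
    by_label = defaultdict(list)
    for row in rows:
        by_label[label_of(row)].append(row)
    rng = random.Random(seed)
    train, validation = [], []
    for group in by_label.values():
        rng.shuffle(group)
        n_val = round(len(group) * validation_fraction)
        if len(group) >= 2:
            # Keep at least one example of this label on each side.
            n_val = min(max(1, n_val), len(group) - 1)
        validation.extend(group[:n_val])
        train.extend(group[n_val:])
    return train, validation

# Hypothetical data: 5 rare "fraud" events among 995 normal ones.
rows = [("txn%d" % i, "fraud" if i % 200 == 0 else "ok") for i in range(1000)]
train, validation = stratified_split(rows, label_of=lambda r: r[1])
print(sum(r[1] == "fraud" for r in train), sum(r[1] == "fraud" for r in validation))  # 4 1
```

With a purely random 20 percent split, there is a meaningful chance that none of the five fraud cases would land in the validation set. Validation would then say nothing about the event you care most about.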

The next dilemma is which kind of machine learning system to use: a gradient-boosted forest, a support vector machine, or a neural network. There is no perfect solution, and in theory, all approaches are equally good. Step outside theory and into the real world, though, and some algorithms are simply better suited to certain tasks, as long as you're not looking for the absolute best result. A good AI/ML modeling tool lets the user choose which type of algorithm to use under the hood. The trick is to handle all the math and make this kind of system accessible to everyday developers.
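To illustrate what "choosing the algorithm under the hood" can look like, here is a sketch with two deliberately simple classifiers: nearest centroid and k-nearest neighbors. They stand in for the heavier methods named above. Both share one hypothetical `fit`/`predict` interface, so the calling code can swap them without changes. The interface and the toy data are assumptions for this example only.

```python
import math
from collections import Counter

class NearestCentroid:
    """Predict the label whose mean feature vector is closest."""
    def fit(self, X, y):
        sums, counts = {}, Counter(y)
        for features, label in zip(X, y):
            acc = sums.setdefault(label, [0.0] * len(features))
            for i, value in enumerate(features):
                acc[i] += value
        self.centroids = {lbl: [s / counts[lbl] for s in acc] for lbl, acc in sums.items()}
        return self

    def predict(self, X):
        return [min(self.centroids, key=lambda lbl: math.dist(x, self.centroids[lbl])) for x in X]

class KNearestNeighbors:
    """Predict the majority label among the k closest training points."""
    def __init__(self, k=3):
        self.k = k

    def fit(self, X, y):
        self.points = list(zip(X, y))
        return self

    def predict(self, X):
        predictions = []
        for x in X:
            nearest = sorted(self.points, key=lambda p: math.dist(x, p[0]))[: self.k]
            predictions.append(Counter(label for _, label in nearest).most_common(1)[0][0])
        return predictions

def accuracy(model, X_train, y_train, X_val, y_val):
    """Train whichever algorithm was chosen and score it on held-out data."""
    predicted = model.fit(X_train, y_train).predict(X_val)
    return sum(p == t for p, t in zip(predicted, y_val)) / len(y_val)

# Hypothetical 2-D data: two well-separated clusters.
X_train = [(0, 0), (1, 0), (0, 1), (5, 5), (6, 5), (5, 6)]
y_train = ["a", "a", "a", "b", "b", "b"]
X_val, y_val = [(0.5, 0.5), (5.5, 5.5)], ["a", "b"]
for model in (NearestCentroid(), KNearestNeighbors(k=3)):
    print(type(model).__name__, accuracy(model, X_train, y_train, X_val, y_val))
```

On easy data like this, both algorithms score perfectly. On messier real data, their results would diverge, which is exactly when being able to swap one for the other pays off.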

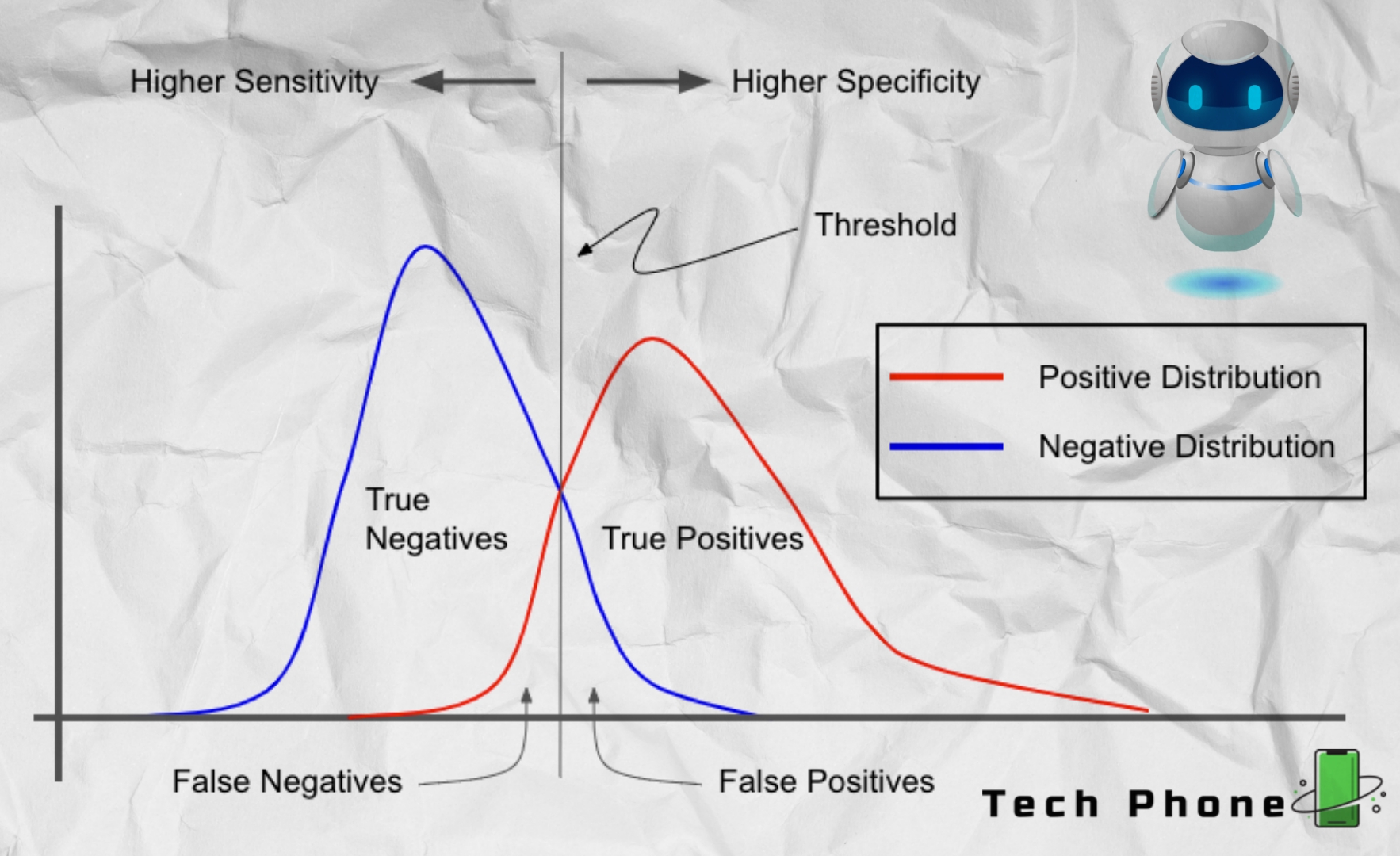

With a set of data to train on, a separate set to validate your model against, and an underlying algorithm, you can start balancing your model's sensitivity and specificity. At some point, your model has to make a binary decision: is a condition true or false, and is a number above or below a specified threshold? Reality is messier than these binary options. When a prediction is compared against the truth, there are four possible outcomes: a true positive, a false positive, a true negative, or a false negative.
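Here is a small sketch of that binary decision. Model scores are cut at a threshold, and each decision is tallied into one of the four outcomes. The scores, the labels, and the 0.5 default threshold are made up for illustration.

```python
def confusion_counts(scores, actual, threshold=0.5):
    """Turn model scores into yes/no decisions at a threshold and tally the
    four possible outcomes against the true labels."""
    counts = {"TP": 0, "FP": 0, "TN": 0, "FN": 0}
    for score, is_positive in zip(scores, actual):
        predicted_positive = score >= threshold
        if predicted_positive and is_positive:
            counts["TP"] += 1
        elif predicted_positive and not is_positive:
            counts["FP"] += 1
        elif not predicted_positive and not is_positive:
            counts["TN"] += 1
        else:
            counts["FN"] += 1
    return counts

# Hypothetical scores from a "cat detector" and the true answers.
scores = [0.9, 0.8, 0.6, 0.4, 0.3, 0.1]
actual = [True, False, True, True, False, False]
print(confusion_counts(scores, actual))  # {'TP': 2, 'FP': 1, 'TN': 2, 'FN': 1}
```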

A perfect model would report only true positives and true negatives, but in practice this is rarely achievable. It is therefore crucial to strike a balance between two measures:

- Sensitivity: the share of actual positives the model correctly identifies, TP / (TP + FN).
- Specificity: the share of actual negatives it correctly identifies, TN / (TN + FP).
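This sketch computes both measures from the formulas above and sweeps the decision threshold. It shows the trade-off: lowering the threshold catches more true positives (higher sensitivity) at the cost of more false alarms (lower specificity). The data is hypothetical, and the function assumes at least one positive and one negative case so neither denominator is zero.

```python
def sensitivity_specificity(scores, actual, threshold):
    """Sensitivity = TP / (TP + FN); specificity = TN / (TN + FP).
    Assumes the data contains at least one positive and one negative case."""
    pairs = list(zip(scores, actual))
    tp = sum(1 for s, a in pairs if s >= threshold and a)
    fn = sum(1 for s, a in pairs if s < threshold and a)
    tn = sum(1 for s, a in pairs if s < threshold and not a)
    fp = sum(1 for s, a in pairs if s >= threshold and not a)
    return tp / (tp + fn), tn / (tn + fp)

# Hypothetical scores from a toy "cat detector" and the true labels.
scores = [0.9, 0.8, 0.6, 0.4, 0.3, 0.1]
actual = [True, False, True, True, False, False]
for threshold in (0.2, 0.5, 0.85):
    sens, spec = sensitivity_specificity(scores, actual, threshold)
    print(f"threshold={threshold:.2f} sensitivity={sens:.2f} specificity={spec:.2f}")
# threshold=0.20 sensitivity=1.00 specificity=0.33
# threshold=0.50 sensitivity=0.67 specificity=0.67
# threshold=0.85 sensitivity=0.33 specificity=1.00
```

Which end of the trade-off to favor depends on the question. A medical screening tool might accept more false positives to avoid missing cases, while a spam filter might do the opposite.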

Nothing stops you from doing all of this by hand, repeatedly retraining and retesting, but a good graphical tool can make the work far more manageable. For the seasoned developers out there, think of the difference between using a modern IDE-based debugger and using a command-line debugger 25 years ago.

Deployment

Once a model is created and trained, it can perform the tasks it was designed for, such as recommending what to buy or watch next, locating cats in images, or estimating home prices. To make that happen, however, the model must be deployable. Models can be deployed in a variety of ways, each suited to the model's needs and usage context.

Anyone familiar with modern IT and cloud environments knows Docker and similar containerization technologies. With this kind of technology, a fully trained model can be packaged with a small web server inside a portable container and accessed via the cloud from anywhere. Data can be sent to the model through standard web requests (or equivalent external calls), and the response contains the results ("this is a cat" or "this is not a cat").
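Here is a minimal sketch of the small web server that would sit inside such a container, using only Python's standard library. The `predict` function is a hypothetical stand-in for a real trained model, and the `/predict` path and JSON shape are assumptions for illustration. The Dockerfile that would package it is omitted.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

def predict(features):
    """Hypothetical stand-in for a trained model: calls it a cat when the
    average feature value exceeds 0.5. A real service would load weights."""
    score = sum(features) / len(features)
    return {"label": "cat" if score > 0.5 else "not a cat", "score": score}

class PredictHandler(BaseHTTPRequestHandler):
    """Accepts POST /predict with a JSON body like {"features": [0.2, 0.9]}."""
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length))
            body = json.dumps(predict(payload["features"])).encode()
        except (ValueError, KeyError, TypeError, ZeroDivisionError):
            self.send_error(400, "expected JSON with a non-empty 'features' list")
            return
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

def serve(host="0.0.0.0", port=8080):
    """Start the server; inside a container, listen on all interfaces."""
    HTTPServer((host, port), PredictHandler).serve_forever()
```

The container's entry point would call `serve()`, and a client would POST JSON such as `{"features": [0.9, 0.7]}` to `/predict`. The `http.server` module is not intended for production traffic, so a real deployment would likely use a production-grade web framework. The request/response shape would be similar.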

With this approach, the trained model can exist independently and be reused or deployed in a known environment. In a dynamic, real-world setting, however, data changes often. A growing number of businesses therefore now offer ways to deploy and manage models, track their effectiveness, and provide everything needed for a full "ML life cycle." This field is known as machine learning operations, or "MLOps." (Think "DevOps," but focused on the narrower ML life cycle rather than the broader SDLC.)
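Tracking a deployed model's effectiveness can start very simply. This sketch assumes ground-truth labels eventually arrive for predictions. It keeps a rolling window of recent results and flags the model when accuracy drops below a threshold, a possible sign that incoming data has drifted away from the training data. The class and its parameters are hypothetical and are not taken from any MLOps product.

```python
from collections import deque

class AccuracyMonitor:
    """Track accuracy over the most recent predictions and flag the model
    when it drops below a threshold (a possible sign of data drift)."""
    def __init__(self, window=100, alert_below=0.9):
        self.results = deque(maxlen=window)  # oldest results fall off automatically
        self.alert_below = alert_below

    def record(self, predicted, actual):
        self.results.append(predicted == actual)

    @property
    def accuracy(self):
        return sum(self.results) / len(self.results) if self.results else None

    def needs_attention(self):
        # Only judge a full window, so a few early errors don't trigger alerts.
        return len(self.results) == self.results.maxlen and self.accuracy < self.alert_below

monitor = AccuracyMonitor(window=10, alert_below=0.8)
for _ in range(10):
    monitor.record("cat", "cat")        # model is right while inputs resemble training data
print(monitor.accuracy, monitor.needs_attention())  # 1.0 False
for _ in range(3):
    monitor.record("cat", "not a cat")  # hypothetical drift: errors start creeping in
print(monitor.accuracy, monitor.needs_attention())  # 0.7 True
```

In a fuller ML life cycle, an alert like this would typically trigger an investigation or a retraining run rather than an automatic fix.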

Python notebooks are essential tools for engineers and data scientists. These blends of code and markup let scientists, engineers, and developers share their work in a form that can be read and run in a web browser. Libraries and systems are widely available, and they make it simple to download or import a trained model with a single Python call.

Say you want to measure how similar two sentences are. Training and building a full natural language processing model would require the complete training data set. Instead, a developer can download a pretrained TensorFlow model and compare the sentences with a few lines of code.
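A pretrained sentence model typically turns each sentence into a vector (an embedding), and the vectors are then compared, often with cosine similarity. Below is a standard-library sketch of that comparison step. The `embed` function is a hypothetical stand-in that uses simple word counts, because a real pretrained TensorFlow encoder can't be shown without that library. The cosine similarity part is the genuine technique.

```python
import math
import re
from collections import Counter

def embed(sentence):
    """Hypothetical stand-in for a pretrained sentence encoder: a bag-of-words
    count vector. A real model would return a dense learned embedding."""
    return Counter(re.findall(r"[a-z']+", sentence.lower()))

def cosine_similarity(a, b):
    """Cosine of the angle between two sparse vectors (1.0 = same direction)."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

s1 = "The cat sat on the mat"
s2 = "A cat sat on a mat"
s3 = "Stock prices fell sharply today"
print(round(cosine_similarity(embed(s1), embed(s2)), 3))  # 0.5
print(cosine_similarity(embed(s1), embed(s3)))            # 0.0, no shared words
```

Word counts miss synonyms and paraphrases entirely. That gap is exactly why downloading a model trained on a large corpus is attractive: a learned embedding would place "The feline rested on the rug" close to the first sentence, while this stand-in would not.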

Model zoos collect trained ML/AI models and make them publicly available. Independent users can then take advantage of the work that larger organizations have put into training them. For instance, a developer interested in tracking people's movements in a certain area could use a trained model that handles the labor-intensive image recognition and path prediction. The developer would not have to worry about how the model was created and trained and could instead focus on the business logic that turns the idea into value.
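This sketch illustrates that division of labor. It assumes a hypothetical pretrained person tracker that emits one `Detection` (track ID plus position) per person per frame. The developer's own code is only the business logic: counting which distinct people entered a region of interest. The tracker output format and the zone are invented for the example.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Detection:
    """One output row from a (hypothetical) pretrained person tracker."""
    track_id: int
    x: float
    y: float

@dataclass(frozen=True)
class Zone:
    """Axis-aligned rectangle in image coordinates."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, d):
        return self.x_min <= d.x <= self.x_max and self.y_min <= d.y <= self.y_max

def visitors_in_zone(detections, zone):
    """Business logic: the distinct people (track IDs) seen inside the zone."""
    return {d.track_id for d in detections if zone.contains(d)}

# Hypothetical tracker output across several frames.
detections = [Detection(1, 10, 10), Detection(1, 55, 40), Detection(2, 80, 90),
              Detection(3, 60, 45), Detection(3, 62, 47)]
checkout = Zone(50, 30, 70, 50)
print(sorted(visitors_in_zone(detections, checkout)))  # [1, 3]
```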

Dr. Ambrose also described an alternative approach to model deployment and sharing. According to her, by breaking a model down into its structure and parameters, you can "store a persisted version of a trained model along with its information in a [known file] format." Because a trained ML model is ultimately just a fixed set of mathematical equations, it can be "exported" into a form that is portable but still usable. A neural network, for instance, is essentially a large collection of weighted sums (linear equations), each passed through a simple nonlinear activation function, with a very large number of parameters. The exact count depends on the number of inputs and the size of each layer of the network.
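The sketch below makes the "just a set of equations" point concrete. It assumes a tiny fully connected network with sigmoid activations. Its parameters are plain numbers, so they can be written to JSON and read back by any program that knows the architecture. The weights and the `"toy-dense-v1"` format tag are made up for illustration; this is not a standard interchange format.

```python
import json
import math

def dense_param_count(layer_sizes):
    """Parameters in a fully connected network: each layer has
    inputs * outputs weights plus one bias per output."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

def forward(model, inputs):
    """Evaluate the stored equations: a weighted sum plus bias per unit,
    passed through a sigmoid, layer by layer."""
    activations = inputs
    for layer in model["layers"]:
        activations = [
            1 / (1 + math.exp(-(sum(w * a for w, a in zip(row, activations)) + b)))
            for row, b in zip(layer["weights"], layer["biases"])
        ]
    return activations

# Hypothetical trained parameters for a tiny 2 -> 2 -> 1 network.
model = {
    "format": "toy-dense-v1",  # made-up tag; the reader must know the architecture
    "activation": "sigmoid",
    "layers": [
        {"weights": [[0.5, -0.2], [0.3, 0.8]], "biases": [0.1, -0.1]},
        {"weights": [[1.2, -0.7]], "biases": [0.05]},
    ],
}

exported = json.dumps(model)     # persist: numbers plus a little metadata
restored = json.loads(exported)  # any program that knows the architecture can reload it
print(dense_param_count([2, 2, 1]))                                # 9
print(forward(restored, [1.0, 0.0]) == forward(model, [1.0, 0.0]))  # True
```

The same formula shows how quickly parameter counts grow. A network with 784 inputs, one hidden layer of 128 units, and 10 outputs already has 784 × 128 + 128 + 128 × 10 + 10 = 101,770 parameters.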

There are several file formats for this kind of representation, including the zip-based Spark ML pipeline representation and the Predictive Model Markup Language (PMML), an XML-based schema. These files let fully trained models be shared across individuals or workflows. As long as the receiving user or program knows the correct underlying model, the information stored in these files is enough to recreate the fully trained setup.

From theoretical to practical

Artificial intelligence and machine learning will become more widely used as they become more practical. This article should give you a basic understanding of these systems or, at the very least, leave you with plenty of open browser tabs to read through.

If you're interested in learning more about building, testing, and using AI models, keep an eye out for Ars' upcoming series on the topic. We'll apply what we learned from last year's natural language processing experiment to a variety of new situations, and we hope you'll join us for the journey.
